Artificial Intelligence and Its Limits

Setting aside the fear-mongering about “Terminator,” thinking about Artificial Intelligence and its future appears to be entering a new, more sober phase. Google recently released a blog post, and a supporting research paper, detailing its concerns about AI safety. Its new research will concentrate on five broad areas:

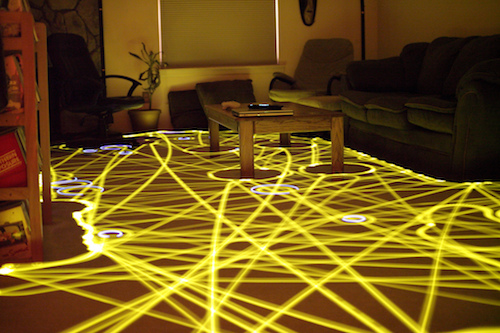

Avoiding Negative Side Effects: How can we ensure that an AI system will not disturb its environment in negative ways while pursuing its goals, e.g. a cleaning robot knocking over a vase because it can clean faster by doing so?

Avoiding Reward Hacking: How can we avoid gaming of the reward function? For example, we don’t want this cleaning robot simply covering over messes with materials it can’t see through.

Scalable Oversight: How can we efficiently ensure that a given AI system respects aspects of the objective that are too expensive to be frequently evaluated during training? For example, if an AI system gets human feedback as it performs a task, it needs to use that feedback efficiently because asking too often would be annoying.

Safe Exploration: How do we ensure that an AI system doesn’t make exploratory moves with very negative repercussions? For example, maybe a cleaning robot should experiment with mopping strategies, but clearly it shouldn’t try putting a wet mop in an electrical outlet.

Robustness to Distributional Shift: How do we ensure that an AI system recognizes, and behaves robustly, when it’s in an environment very different from its training environment? For example, heuristics learned for a factory workfloor may not be safe enough for an office.

Each of these concerns is sound, and together they bring welcome sense and precision to the discussion. Failure in any of them will limit what we can accomplish with these machines. Yet they strike me as both optimistic and incomplete. Successful AI, for Google and others, implies autonomous machines: machines designed to replace humans. That assumption is reflected in the problems they list. But artificially intelligent machines are not required to work autonomously, and that distinction matters.

In an earlier post, I mentioned the Dow's Flash Crash from a few years ago. The crash represents one type of problem Google does not cover: the “cliffs” inherent in all computing. Machines are, more or less, rule-driven; their essence is digital: something is either on or off. As writer Zulfikar Abbany notes:

[Humans] observe degrees of truth, fuzzy logic. Computers don’t get confused, they either do or they don’t do, according to the instructions in the software that runs them. This may make it easier for self-driving cars to react faster in dangerous situations. But it doesn’t mean they will make the right decision.

When computers work, they work consistently well; but when they fail, they can fail catastrophically. Human performance, by contrast, degrades gradually. An inebriated driver's skill worsens in proportion to how much alcohol is in their system; one drink too many does not send a driver careening out of control. But when the data a computer receives pushes it past the boundaries of its design, the badness of its results is not measured by how far it has wandered; it simply fails. If human performance tends to slide down a gentle slope, a computer program's performance more often skids over the edge of a cliff with a very deep bottom.
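To make the cliff concrete, here is a minimal sketch of one classic example, integer overflow, which is my illustration rather than anything from the crash itself. It assumes a hypothetical controller that stores a value in a signed 8-bit register; the function name `to_int8` is made up for the purpose. Values inside the design range pass through unchanged, but one step past the limit the stored value flips to a large negative number instead of becoming slightly wrong.

```python
def to_int8(value: int) -> int:
    """Emulate storing an integer in a signed 8-bit register (two's complement).

    Hypothetical example: any value outside -128..127 wraps around
    instead of saturating or failing loudly.
    """
    low_byte = value & 0xFF  # keep only the low 8 bits, as the hardware would
    return low_byte - 256 if low_byte >= 128 else low_byte


for requested in (120, 126, 127, 128, 130):
    print(requested, "->", to_int8(requested))
# 120 -> 120
# 126 -> 126
# 127 -> 127
# 128 -> -128
# 130 -> -126
```

Exceeding the boundary by a single unit produces an error of 256 units, and exceeding it by three produces the same error. The size of the mistake bears no relation to how far the input strayed.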

Facebook drew attention, unintentionally, to another problem inherent in all computer programs but magnified in those trained on data and left to “decide” on their own. The problem is bias. Computer programs, even those that power AI machines, are conceived, designed, and developed by humans, and each program contains hundreds of thousands of interlocking decisions whose combined effect determines the outcome. Even self-adjusting programs, like those behind AlphaGo, contain rules whose parameters get tweaked based on their training data. When that data is objective, such as a winning board position in the game of Go, bias matters little; but when the data is looser, such as photographs of supposedly good-looking people, it is highly subjective. With rules-bound subject matter, such as accounting and games, bias is less likely to affect the computer's results because the rules are fixed: there are clearly right moves and clearly wrong moves. But in subjects that are interesting and complex, precisely the areas where we'd like to use artificially intelligent machines, bias influences, if not determines, the result.

Yet even if we eliminate bias and keep our machines away from cliffs, one problem remains that the computing industry has yet to solve: plain old mistakes. None of the software we rely on in so much of our lives is free of errors: not your iPhone, not your PC, not even your ATM. Every program ever written has contained bugs, and every program in use contains bugs. Thankfully, if a program is small enough and its use sufficiently restricted, we can constrain the effects of its bugs so they are unlikely to cause harm. But large programs, especially those to which AI's advocates want to cede control, obey Murphy's Law: “If anything can go wrong, it will.” And they frequently adopt MacGillicuddy's Corollary: “At the most inopportune time.” AI promoters express surprise at the output of their creations, as if an unexpected result were the mark of true intelligence. It's not. It is, instead, a sign of our inability to predict what large, complex things will do. And if we cannot predict the results, how could we distinguish a surprising correct result from one caused by a programming error?

So, what do we do? Do we abandon AI? Do we return to the days of the abacus? I suggest we do the obvious: use machines for what they do well and humans for what we do well. And, despite the hype, at least some Silicon Valley companies are exploring the same approach, as Facebook's M project shows. Machines are good at repetition and precision, at dealing quickly with what they've seen before, and at repeating what they're told. They're lousy, or at least unpredictable, at handling the unexpected. Humans, on the other hand, handle the unexpected, if not well, at least gracefully. But we make more mistakes with rote, repetitive tasks.

We should not, then, deploy autonomous machines to replace humans; we should use artificially intelligent machines to assist them. Let's use Deep Learning machines to assist radiologists in analyzing MRIs and mammograms. Let's use self-learning machines to monitor for fraudulent transactions (to be reviewed, in turn, by humans). Let's use pattern-matching machines to ferret out possible plagiarism (to be addressed, in turn, by a human editor). Let's capture, as best we can, what experts have learned and make it available for everyone else to use.

But let's stop fooling ourselves: AI is not the emergence of another intelligence. And it's certainly not one we should allow to wander on its own without human oversight. Computers are just machines, designed, built, and programmed by something more than a mere machine: the human mind.

Photo: Paths taken by cleaning robot in 45 minutes, by Chris Bartle [CC BY 2.0], via Wikimedia Commons.