Group Delusions Aside, Sentient Robots Aren’t on the Way

Our colleague Wesley Smith commented yesterday on a piece in Nature, “Intelligent robots must uphold human rights.” We read there:

There is a strong possibility that in the not-too-distant future, artificial intelligences (AIs), perhaps in the form of robots, will become capable of sentient thought. Whatever form it takes, this dawning of machine consciousness is likely to have a substantial impact on human society.

More:

Academic and fictional analyses of AIs tend to focus on human-robot interactions, asking questions such as: would robots make our lives easier? Would they be dangerous? And could they ever pose a threat to humankind?

These questions ignore one crucial point. We must consider interactions between intelligent robots themselves and the effect that these exchanges may have on their human creators. For example, if we were to allow sentient machines to commit injustices on one another — even if these ‘crimes’ did not have a direct impact on human welfare — this might reflect poorly on our own humanity. Such philosophical deliberations have paved the way for the concept of ‘machine rights’.

“Machine rights”? I have to confess, when I read articles like this I have an almost visceral reaction. It amounts to a full-blown, ongoing perplexity and fascination with the capacity of otherwise intelligent people to engage in serious-sounding group delusion. To pick this apart is a little like explaining to someone why his interest in horoscopes is probably untethered from any genuine scientific knowledge about the planets, the relevant laws governing their motion, and so on. I’m tempted to say, “Yes, that’s right, the robots are becoming intelligent so quickly that they may soon take control. In fact, here they come now! Run for cover!”

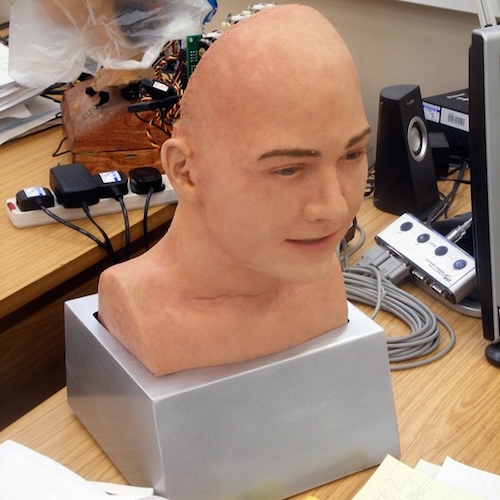

Let’s do some not-too-painful sanity checking. What is the current state of robot technology? MIT is famous for cutting-edge work on robots (cf. Rodney Brooks), and they’ve got a robot that is getting better at identifying large objects like plates, as distinct from, say, a salad bowl.

Meanwhile, in manufacturing, the latest three-hundred-pound gadget designed to grab and manipulate plastic components (I gather) is getting so smart that when a component topples over, it can move its claw over to pick it up.

The serious point here is that the current state of robotics tells a different tale from robots “coming alive” in the near future and taking control of human society. The technical challenges to simulating actual human intelligence are vast and daunting. If fluff articles from no less than Nature must continue to bombard hapless readers with sci-fi fantasies about the coming intelligence revolution, I suggest they should first offer the simplest form of evidence apart from sheer rhetorical emotivism.

For example, let’s have a URL to an article describing an actual robotic system, leveling with the reader on the actual capabilities of the system. I offer bonus points for explaining how apparently simple problems, like moving around in a dynamic environment (a street corner!), confound current systems. I offer even more bonus points for explaining why the supposedly smart robot can’t understand your easy conversational banter with it at all.

AI systems (robots’ brains) and full robotic systems suffer from two limitations that show no signs of going away, now or in any foreseeable future. First, there is the inability to keep track of aspects of their environment that become relevant as a function of time: dynamic environments, where things change as the robot moves through them. In other words, the real world. Second, there is the inability to keep track of aspects of language that keep changing as a function of time: conversation, in plain and simple everyday terms.

The Turing Test has been debated since the inception of AI in the 1950s, and to date it shows no sign of yielding to gargantuan increases in hardware performance via Moore’s Law, or advances in algorithmic techniques, such as convolutional neural networks, or so-called Deep Learning. An enthusiast with the stripes of, say, Ray Kurzweil overwhelms the uninformed with scientific-looking graphs showing exponential progress toward superintelligence. Yet a simple graph of improvements on the Turing Test over the years would be decidedly flat, and downright embarrassing.

I wonder what Bill Gates or Elon Musk or any other luminary enthralled with the current rhetoric about a coming AI would say to such a graph? The smart money is on looking at the problem without sci-fi goggles, separating it from other, narrower problems where human intelligence is decomposable into a set of representations and algorithms that admit of automation, and clarifying the actual landscape so that other, serious scientists and interested parties can productively discuss the roles of computation and human thinking in society.

It’s a myth that the dividing line between man and machine is essentially temporary, and that all problems once thought solely in the purview of human intelligence will eventually yield computational solutions. We’re going backward with the Turing Test, for instance, as a widely publicized 2014 contest demonstrated: Eugene Goostman simulates a vapid, sardonic 13-year-old Ukrainian speaking broken English to fool a few people for a few minutes (people who no doubt are performing backbends to lower their own standards of conversation with actual persons, in hopes of a history-making moment with a mindless chatterbot).

There’s no real response to what I’ve just said. I mean, no one seriously thinks computers are making substantial let alone exponential progress on actual natural language interpretation or generation in non-constrained domains — yet by sleight of hand, ignorance, overenthusiasm, blurry vision, dyspepsia, or what have you, provision of seemingly related examples (chess, driverless cars, Google Now for recommendations, or Siri for voice recognition, perhaps) keeps the parlor tricks alive, and claims of inexorable progress continue.

There is progress on computation, of course, but it has little to do with the machines themselves acquiring actual intelligence. It has everything to do with researchers in computer science and related fields continuing to use their own creative intellects to find clever ways to represent certain tasks that admit of algorithmic decomposition, such that the tasks can be mapped onto digital computer hardware. Our intelligence itself does not appear to be so reducible.

And so these discussions are a tempest in a teapot. Who’s really preparing for the robot future? And here let’s avoid equivocating between an economy increasingly dominated by dumb automation, and a world inhabited by truly intelligent digital beings. Who’s worried about the latter? I mean, beyond relatively uninformed, self-styled futurists (that most coveted of social roles), or nose-against-the-glass software junkies in the Valley, binge-watching digitally remastered editions of Blade Runner, and speculating about “dating their operating systems,” as director Spike Jonze offered up, memorably, complete with the sultry voice of Scarlett Johansson in his 2013 hit movie Her.

Sci-fi fantasies dressed up as serious “What if?” discussions are not new, of course. Wormholes have a basis in actual science (but probably are still not possible). I’d rather see a perpetual shouting match about the coming of time travel, like back in the Star Trek days. It’d be sci-fi still, but actually closer to reality than the imminent-smart-robots palaver today. There’s a discussion to be had about computation in society, certainly, but it’s not the silly one we’re having.

Image by Anders Sandberg from Oxford, UK (Jules) [CC BY 2.0], via Wikimedia Commons.