Physics, Earth & Space

Bang for the Buck: What the BICEP2 Consortium’s Discovery Means

It is all over the Internet. The discovery the BICEP2 consortium announced on Monday is being hailed as next year’s Nobel Prize in Physics and as the confirmation of two fundamental cosmological theories at a single blow: Einstein’s General Theory of Relativity (gravity) and Alan Guth’s Inflationary Cosmology. Or is this all hype?

Why does confirming Einstein count as news? Doesn’t everybody already accept Einstein’s general theory? Well, yes and no. Einstein’s theory made profound predictions about the curvature of spacetime that were vindicated by Eddington’s 1919 eclipse expedition, but it also predicted the existence of gravity waves: undulations of spacetime, whose quanta would be gravitons, emitted, say, by orbiting neutron stars. Unfortunately, no one has ever observed gravitons, despite an initial flurry of claims after Joseph Weber invented the gravity wave antenna in the 1960s.

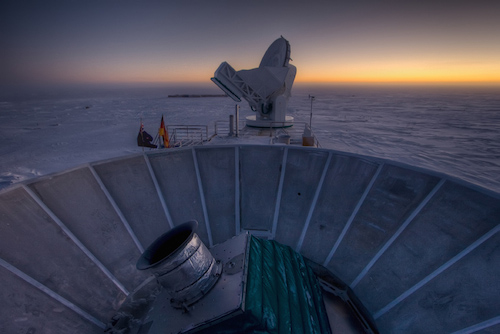

Poor Joe was also among the first to describe the principle behind the maser and the laser, but in both cases the honor went elsewhere. In the case of gravity waves, the search he started now funds two national laboratories (LIGO), in Louisiana and Washington State, whose exquisitely balanced mirrors are meant to measure the subtle tug of a passing gravity wave. Needless to say, the graviton is elusive prey: more than a billion dollars has now been spent hunting it, with plans to move the search into space to increase the sensitivity. So it would be a major coup indeed if a lowly telescope in the bitter cold of Antarctica had finally captured the beast.

But the inflationary claim is more spectacular because it was even more unexpected. Inflation was Alan Guth’s attempt to explain why the early universe after the Big Bang was so very “flat,” which is to say, why the force of the explosion matched the force of gravity to one part in 10^60. To put this in perspective, there are about 10^80 protons in the visible universe, so an imbalance of just 10^20 protons, about the mass of one grain of sand, would have upset the Big Bang, causing it either to recollapse into a black hole or to expand so fast that stars and galaxies could never form. One grain of sand more, one grain less, and we would not be here.
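The arithmetic in this paragraph can be checked in a few lines. This is only an order-of-magnitude sketch: it takes the paragraph’s own figures (about 10^80 protons, balance to one part in 10^60) and assumes a proton mass of about 1.67 × 10^-27 kg; the function name `tipping_point` is hypothetical.

```python
# Order-of-magnitude check of the fine-tuning arithmetic.
# Assumed inputs: ~10^80 protons in the visible universe, balance required to
# one part in 10^60, proton mass ~1.6726e-27 kg.
PROTON_MASS_KG = 1.6726e-27


def tipping_point(protons_in_universe: int, precision: int) -> tuple[int, float]:
    """Return (number of protons, mass in milligrams) equal to one part in
    `precision` of the universe's proton count."""
    protons = protons_in_universe // precision  # integer math keeps powers of ten exact
    mass_mg = protons * PROTON_MASS_KG * 1e6    # kg -> mg
    return protons, mass_mg


if __name__ == "__main__":
    n, mg = tipping_point(10**80, 10**60)
    print(f"{n:.0e} protons, about {mg:.2f} mg")
```

The result is 10^20 protons weighing a fraction of a milligram, which is indeed the scale of a small grain of sand.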

This cosmic balancing act caused many theorists to search for a reason, a cause that would remove the “fine tuning” of the Big Bang. Guth’s solution was to have the Big Bang operate like a giant pressure cooker whose lid has just been forcibly removed. All the water, all the spinach in the pot would instantly boil, creating a volcano of spinach dripping off the ceiling. This is what physicists demurely call a “spontaneous phase change.”

Guth wants spacetime in the very early Big Bang to spontaneously boil and expand faster than the speed of light, which would have the side benefit of making everything “flat” afterwards, everything covered in an equal amount of spinach. Nearly all cosmologists accepted this model in one form or another, preferring it to the increasingly disturbing “fine tuning” argument employed by advocates of intelligent design among others.

But Guth’s speculation has proved hard to demonstrate. Numerous theoretical problems have sent it back to the drawing board, and it is now in its third or fourth iteration. One theorist bemoaned that the inflation model now needs fine-tuning of its own, perhaps as great as 1 part in 10^100, making the cure worse than the disease. So it seems as if the model will die a death of a thousand cuts if we don’t give it a data transfusion soon. That is why so many people are seeing this BICEP2 result as Nobel Prize material: it not only rescues the favored model of cosmologists, but also saves the jobs of a thousand people at two national labs who are struggling to justify their expensive failure.

What exactly did the BICEP2 telescope observe? Well, just to clear the air, it measured neither gravitons nor inflatons. What it actually measured was the polarization of light from the Big Bang, the Cosmic Microwave Background (CMB) radiation. Just as the blue sky is sunlight scattered by air molecules, so also the heavens glow a deep shade of red (so deep that it lies in the microwave band) from the light emitted by the Big Bang, which has cooled for some 13 billion years. And just as the blue sky is polarized (which birds use to navigate, and which you may be able to observe with the right kind of sunglasses), so also the CMB is polarized. The BICEP2 telescope observed this polarization much as you would by rotating your sunglasses through ninety degrees and watching the sky alternately brighten and dim.
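The sunglasses analogy can be made quantitative with Malus’s law. The sketch below models only that analogy, not BICEP2’s actual detectors: an ideal polarizing filter is rotated through partially polarized light, and the bright and dim readings recover the polarized fraction. The 10% polarization and 30° axis are hypothetical values, and the function names are illustrative.

```python
import math


def transmitted_intensity(total: float, pol_fraction: float,
                          pol_angle_deg: float, analyzer_deg: float) -> float:
    """Intensity passed by an ideal linear polarizer (the 'sunglasses').

    The unpolarized part of the light is halved regardless of angle; the
    polarized part follows Malus's law, I = I0 * cos^2(theta).
    """
    theta = math.radians(analyzer_deg - pol_angle_deg)
    unpolarized = (1.0 - pol_fraction) * total / 2.0
    polarized = pol_fraction * total * math.cos(theta) ** 2
    return unpolarized + polarized


def degree_of_polarization(i_max: float, i_min: float) -> float:
    """Recover the polarized fraction from the brightest and dimmest readings."""
    return (i_max - i_min) / (i_max + i_min)


if __name__ == "__main__":
    # Hypothetical light: 10% polarized, polarization axis at 30 degrees.
    readings = [transmitted_intensity(1.0, 0.10, 30.0, a) for a in range(0, 180)]
    p = degree_of_polarization(max(readings), min(readings))
    print(f"recovered polarization fraction: {p:.3f}")
```

The brightest reading comes when the filter lines up with the polarization axis and the dimmest comes ninety degrees later; the contrast between them is what measures the polarization.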

Now comes the interesting part. What does this observation mean? It could mean that space is filled with magnetic fields, and that like the polarizing filters in your sunglasses, the CMB becomes more polarized the more magnetic fields it traverses. In fact, this is what was observed in the experiments that preceded BICEP2, with strong polarization being attributed to galaxies. That is why BICEP2 was looking in a part of space that had no galaxies.

Or the polarization might be a result of needle-shaped dust grains that reflect the CMB differently depending on their orientation. Again, this was seen in the experiments preceding BICEP2, so the telescope was pointed away from most of the galactic dust lanes. Aerosols in the air might also cause the effect, just as scattering in the air polarizes the blue sky. So BICEP2 was stationed at the South Pole to get the clearest, least contaminated view of the CMB, away from our galaxy.

When the data was processed, the signal was still there, and stronger than the scientists had expected. This led to the hope that perhaps it wasn’t an artifact of matter in the universe, perhaps it was actually a property of the CMB itself.

Turning to theorists, the team looked at whether this effect was predicted by the models. If I reconstruct the probable scenario, somebody dragged up a gravity wave model of the early universe and said that if matter was compressed in one direction, say by a gravity wave, then the CMB light would be preferentially polarized. But the effect was far too small to explain the data. Then somebody else had a brainstorm and suggested that inflation would flatten the background but not the foreground, effectively making the signal stand out or become amplified. Since all these models have three or four dials, the theorists feverishly got to work and found a setting of the dials that matched the data. (One of the common pitfalls of all modelers is to confuse curve fitting with prediction, that is, to confuse the assumptions of the model with the conclusions of the fit.)
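The parenthetical warning about curve fitting can be illustrated with a toy example. This is not BICEP2’s analysis: the four data points below are made-up noise, and a cubic polynomial stands in for a hypothetical model with four adjustable “dials.” It uses NumPy’s `polyfit` and `polyval`.

```python
import numpy as np

# Hypothetical "data": four measurements that are pure noise, with no signal.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([0.3, -1.1, 0.8, 0.2])

# A model with four dials (a cubic has four coefficients) can match four points exactly.
coeffs = np.polyfit(x, y, deg=len(x) - 1)
residuals = y - np.polyval(coeffs, x)
print("largest residual:", np.max(np.abs(residuals)))

# A perfect in-sample fit is not a prediction: the cubic extrapolates far
# outside the range of the noise it was fitted to.
print("model's 'prediction' at x = 4:", np.polyval(coeffs, 4.0))
```

The fit is exact to rounding error, yet the “prediction” at the next point is about -8.7, far outside the range of the noise that produced it. Matching the data, by itself, says nothing about whether the dials mean anything.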

Now the BICEP2 consortium had the opposite problem. Having first struggled with too big a signal for the theory, they now had too important a theory for the signal. They spent another year double-checking, trying out alternative explanations, and waiting for confirmation. When BICEP2’s successor, the Keck Array, went into operation and saw the same signal, they felt confident enough to release their paper.

Isn’t this the very model of propriety in science — careful measurement, skeptical modeling, confirmatory measurements, cautious publication? Why then do I give this paper a 1 in 10^60 chance of being correct?

Two independent models that have never been confirmed are both needed to process the data and arrive at an explanation. Because the two models are unrelated to each other, producing the effect requires two extremely unlikely chance coincidences. Multiple dials in each unconfirmed theory, all with unconstrained parameters, have to be adjusted to get the model to agree with the data. There are just too many ways in which the modelers’ assumptions could unconsciously affect the results for this to be believed. As Richard Feynman said about physics, “The first principle is that you must not fool yourself, and you are the easiest person to fool.”

On top of the sheer improbability of getting two explanations to work together, there is the improbability of inflation working on its own. Remember that inflation was invented to solve the metaphysical problem of fine-tuning, not because there was any independent justification for an “inflation field” or an “inflaton” particle. Many theorists are abandoning this inflation field as too simplistic, believing that a better solution would also resolve the thorny question of “quantum gravity.”

At the fiftieth annual Relativistic Astrophysics symposium last week, begun by John Wheeler in his revival of cosmology in the 1960s, Roger Penrose opined that the real unresolved question in Big Bang cosmology was the disparity between the high-entropy particles and the low-entropy gravity, a disparity that only gets worse with inflation. He and others suggested that the “phase transition” that occurred in the Big Bang was a collapse of several higher dimensions down to the four we know today, a collapse that presumably swallowed all the excess entropy in the gravitational field, including all the gravitons. Such a theory would not fit the BICEP2 model very well at all. So it is one thing when data support a new and controversial theory, but quite another when the data support a decrepit theory on life support.

But hasn’t the BICEP2 consortium looked at all the alternatives and found them wanting? Ahh, this is the great conceit of modelers, that they have included all the physics. As a famous Secretary of Defense once said, “There are known unknowns; that is to say, there are things that we now know we don’t know. But there are also unknown unknowns — there are things we do not know we don’t know.” It is those unknown unknowns that are the real gremlins in the model. This is why astrophysicists usually wait until the signal is five sigma above the noise, the better to beat the gremlins with. In this data analysis, the BICEP2 consortium doesn’t know what the dust density is, and therefore doesn’t know how much of the signal is due to intervening matter. What they say in their paper is very revealing:

The main uncertainty in foreground modeling is currently the lack of a polarized dust map. (This will be alleviated soon by the next Planck data release.) In the meantime we have therefore investigated a number of existing models and have formulated two new ones … we find significant correlation and set a constraint on the spectral index of the signal consistent with CMB, and disfavoring synchrotron and dust by 2.3σ and 2.2σ respectively.

In plain talk, they guessed at what the dust effect would be and then found a 2.2-sigma signal above that assumed noise. That means if the dust were to be, oh, three times as bright as they expected, their signal would disappear. From my own contacts in the astrophysics field, I know that magnetized dust is even more polarizing than regular dust, which for them is an unknown unknown. Shouldn’t they have waited for a five-sigma effect? Or at least waited for the Planck data release to give them a dust model? Why the hurry?
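To see why the gap between 2.2 sigma and five sigma matters, a significance can be converted into the probability that noise alone would produce a fluctuation at least that large. This sketch assumes Gaussian noise and uses the one-sided tail convention common in astrophysics; the function name `one_sided_p` is hypothetical, and the conversion says nothing about systematic errors such as a mis-modeled dust foreground.

```python
import math


def one_sided_p(sigma: float) -> float:
    """Probability that Gaussian noise alone fluctuates upward by at least
    `sigma` standard deviations (one-sided tail of the normal distribution)."""
    return 0.5 * math.erfc(sigma / math.sqrt(2.0))


if __name__ == "__main__":
    for s in (2.2, 2.3, 5.0):
        p = one_sided_p(s)
        print(f"{s:.1f} sigma: p = {p:.2e} (about 1 in {1 / p:,.0f})")
```

A 2.2-sigma result corresponds to roughly a 1 in 70 chance fluctuation, while five sigma corresponds to roughly 1 in 3.5 million, which is why the stricter threshold is the usual bar for a discovery claim.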

Because the measurement of CMB polarization is a crowded field, and they wanted to be the first to publish. All that talk about waiting a year for confirmation, then, is really about creating an impression rather than setting a precedent. They wanted a ground-breaking theory; they wanted to be the first to publish; they didn’t want to wait for necessary background data; they wanted splash, and that is what they got.

Nothing in this paper inspires confidence in the results, but rather seems to highlight the hubris that is at the heart of 21st-century big science.