Evolution


When Good Science Turns Bad: Some Potentials for Ugly Distortion

The layman tends to think of “science” as some kind of uniform knowledge-producing machine: pour ingredients into the scientific method, and out come objective, scientific facts. Real science is much more human, sometimes all too human. Some of the most hazardous biases in science are those the scientists are not even aware of. A couple of examples surfaced in recent journal articles. Both have the potential either to generate incorrect conclusions or to distort the image of “good science.”

Impacted Teeth

Consider, for example, a metric called “journal impact factor.” According to Science Insider, a policy blog from Science Magazine, it was a nice idea that turned rogue:

Journal impact factors, calculated by the company Thomson Reuters, were first developed in the 1950s to help libraries decide which journals to order. Yet, impact factors are now widely used to assess the performance of individuals and research institutions. The metric "has become an obsession" that "warp[s] the way that research is conducted, reported, and funded," said a group of scientists organized by the American Society for Cell Biology (ASCB) in a press release. (Emphasis added.)

Even worse, the algorithm that calculates it is flawed:

For example, it doesn’t distinguish primary research from reviews; it can be skewed by a few highly cited papers; and it dissuades journals from publishing papers in fields such as ecology that are cited less often than, say, biomedical studies.
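To make the skew concrete, here is a minimal Python sketch of a simplified two-year impact factor: citations a journal receives this year to items it published in the previous two years, divided by the number of citable items it published in those two years. The journal and its citation counts are invented for illustration, and `two_year_impact_factor` is a hypothetical helper, not a Thomson Reuters tool.

```python
from statistics import median


def two_year_impact_factor(citations_this_year, citable_items):
    """Simplified two-year impact factor: citations received this year by
    items from the previous two years, divided by the citable items
    published in those two years (a sketch, not the official calculation)."""
    return citations_this_year / citable_items


# Hypothetical journal: 10 citable papers from the previous two years,
# with the citations each received this year (invented numbers).
per_paper_citations = [1, 0, 2, 1, 3, 0, 1, 2, 1, 189]

jif = two_year_impact_factor(sum(per_paper_citations), len(per_paper_citations))
typical = median(per_paper_citations)

# The same journal with its single blockbuster paper left out.
jif_without_top = two_year_impact_factor(
    sum(per_paper_citations[:-1]), len(per_paper_citations) - 1
)

print(f"impact factor:        {jif:.1f}")
print(f"median paper:         {typical}")
print(f"without top paper:    {jif_without_top:.1f}")
```

One heavily cited paper lifts the average to 20 even though the median paper drew a single citation; leave that paper out and the figure falls to about 1.2. A journal-level average, in other words, says little about any individual article published there.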

For these and other reasons, there has been an insurrection of sorts:

More than 150 prominent scientists and 75 scientific groups from around the world today took a stand against using impact factors, a measure of how often a journal is cited, to gauge the quality of an individual’s work. They say researchers should be judged by the content of their papers, not where the studies are published.

In a perfect world, that’s how science should be judged, shouldn’t it? But if it were, there would have been no need for this high-profile protest. The situation recalls Martin Luther King’s ideal that persons should be judged by the content of their character; half a century later, we all know that racial policy remains a major controversy.

What the insurrection shows is that the practice of science is in fact embroiled in controversy: good science can be neglected, while poor science can be trumpeted merely by the journal it is published in, or by how many times it is cited. What kind of metric is that? That was never the intent, yet huge issues like “funding, hiring, promotion, or institutional effectiveness” hang on it, according to the American Society for Cell Biology.

The San Francisco Declaration on Research Assessment, or DORA, was framed by a group of journal editors, publishers, and others convened by the American Society for Cell Biology (ASCB) last December in San Francisco, during the Society’s Annual Meeting. The San Francisco group agreed that the JIF, which ranks scholarly journals by the average number of citations their articles attract in a set period, has become an obsession in world science. Impact factors warp the way that research is conducted, reported, and funded.

What began as a tool for librarians has become a “powerful proxy for scientific value.” Notice that this affects “world science” — it is a big deal.

The response of Thomson Reuters was startling — and not encouraging. It sounds like there is no quick fix forthcoming:

No one metric can fully capture the complex contributions scholars make to their disciplines, and many forms of scholarly achievement should be considered.

Soft Software Wares

Another potential “distorter” of science is reliance on black-box software. Modeling in science is frequently done by software these days, but the software itself is generally not peer reviewed. Who checks the validity of software? Who knows if the pretty charts and graphs it puts on the screen tell the truth?

This problem was discussed in another paper in Science, “Troubling Trends in Scientific Software Use.” A team of six authors, mostly from Microsoft Research, looked at how scientists choose and use software, and found something troubling indeed: it is very unscientific! Many latch onto particular modeling tools like teens sharing apps.

One might assume that two principal scientific considerations drive the adoption of modeling software: the ability to enable the user to ask and answer new scientific questions and the ability of others to reproduce the science. Alas, diffusion innovation theory defies such objectivity, indicating the importance of communication channels, time, and a social system, as well as the innovation itself. An individual’s adoption of an innovation relies on awareness, opinion leaders, early adopters, and subjective perceptions. Scientific considerations of the consequences of adoption generally occur late in the process, if at all.

This may be appropriate when deciding which smartphone application one uses. But we must hold scientific inquiry and adoption of scientific software to higher standards. Does use of modeling software conform to basic tenets of scientific methods? We describe survey findings suggesting that many scientists adopt and use software critical to their research for nonscientific reasons. These reasons are scientifically limiting. This result has potentially wider implications across all disciplines that are dependent upon a computational approach.

As a test case, the authors surveyed four hundred ecologists who use “species distribution modeling” (SDM) software. To their chagrin, they found that not a few respondents use it because their colleagues recommended it, or because the company had a good reputation, or because they like the “click and run” interface.

Most people, in some form, "trust" software without knowing everything about how it works. More complex modeling software is a special case, particularly when the answer cannot be checked without the software, and there is thus no ability to validate its output. We have reason for approaching scientific software with healthy circumspection, rather than blind trust.

Even more troubling was the fact that many of the scientific respondents were “unable to interpret the original algorithms, much less understand how they were implemented in the distributed code.” To them, the software was a black box: input data, output graph. Who knows what machinations the programmer embedded in the code? Did the developer understand the algorithms?

Peer review of software, if it were done, would require highly technical knowledge, so here is another troubling issue, with wide ramifications and no quick fix. The authors make some recommendations, such as,

A standard of transparency and intelligibility of code that affords precise, formal replication of an experiment, model simulation, or data analysis, as well as peer-review of scientific software, needs to be a condition of acceptance of any paper using such software….

Societally important science relies on models and the software implementing them. The scientific community must ensure that the findings and recommendations put forth based on those models conform to the highest scientific expectation.

But “Changing the status quo will not be easy,” they admit. No kidding.

Applications

Where have you heard of scientific modeling lately? Climate change, for one, is heavily dependent on models. Think of the billions of dollars at stake, and the possible impacts on millions of lives if drastic political actions proposed by some were to be implemented.


Yet those policies are dependent on models instantiated in software that might not be valid. Who watches the developers? Hardly a month goes by without some new realization that climate models have not adequately taken into consideration cloud reflectivity, absorption of carbon by rainforests, sequestration of carbon in the sea floor, or other factors.

Another field highly dependent on models is Darwinian tree-making. Phylogenetics is rife with models that try to crunch vast amounts of data into simple diagrams that supposedly show ancestral relationships between plants and animals.

Those who know the literature may be aware of algorithmic issues such as long-branch attraction that can distort results, even without the software. But who ensures that the software even implements the algorithms correctly? Along the modeler’s way, various shortcuts or compromises like “rate heterogeneity” can force uncooperative data to fit expectations. The potential for self-deception is huge.

All is well, though, when the phylogenetic tree gets published in a “high-impact journal.”

Recall how Darwin’s best-known book had high "impact" compared to Mendel’s paper in an obscure Austrian journal, leaving Mendel’s work virtually ignored for half a century. That the subjective factors reviewed here are not new should remind us that human frailties and biases are inevitable inputs to the scientific machine. If not scrutinized by debate and critical discussion, the machine can create a “scientific consensus” that ignores the small errors while sweeping on to the grand fallacy.

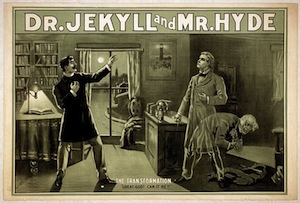

Image: The Strange Case of Dr. Jekyll and Mr. Hyde/Wikicommons.