The Science of Denial

Scientists sometimes find themselves wishing things were different. In one sense that’s a thoroughly unremarkable observation. After all, scientists are human, and humans have always found themselves wishing things were different.

But what if some of the things scientists wish were different are the very things they have devoted themselves to studying? In other words, forget about salaries, teaching loads, and grant funding. What if some scientists want the brute facts of their own field of study to be other than what they really are?

As odd as it may seem, particularly to non-scientists, that tension between preference and reality has always been a part of doing science. Like everyone else, scientists don’t just have ideas — they favor them… even promote them. And for scientists, as for everyone else, sometimes those cherished ideas are just plain wrong.

For decades now, a growing minority of scientists have argued that the standard explanations of biological origins are prime examples of this — cherished ideas that are spectacularly wrong. That raises an interesting question. If these ideas are really so wrong, why do so many experts affirm them?

Some, of course, would call this a false paradox. By their way of thinking, the mere fact that so many experts accept these ideas shows that they can’t be badly wrong. But paradigm shifts do happen in science, and every time they do the world is treated to the memorable spectacle of lots of experts being badly wrong.

Even experts have ways of avoiding reality. When it comes to the improbabilities that plague naturalistic origins stories, the avoidance often takes the form of what I’ve called the ‘divide and conquer’ fallacy. [1] It works like this. Instead of asking what needs to be explained naturalistically, you concentrate on what can be so explained. Specifically, you look for some small piece of the real problem for which you can propose even a sketchy naturalistic solution. Then, once you have this mini-solution, you present it as a small but significant step toward the ultimate goal of a full credible story.

But the only way to tell whether small steps of this kind are taking us toward that ultimate goal or away from it is to examine them carefully in the context of the whole problem. If that analysis doesn’t give the intended result, it’s tempting to skip it and end on a happy note.

Consider the work that Lehmann, Cibils, and Libchaber recently published on the origin of the genetic code. [2] By one account they have “generated the first theoretical model that shows how a coded genetic system can emerge from an ancestral broth of simple molecules.” [3]

That would be huge alright. And huge claims always call for caution.

Let’s start with some background. The “broth” that Lehmann et al. are thinking of is sometimes called the “RNA world” — a hypothetical early stage in the evolution of life when RNA served both the genetic role that DNA now serves and the catalytic role that proteins now serve.

In modern life, most RNA performs a cellular function analogous to the function of the clipboard on your computer. It enables sections of ‘text’ to be lifted from a larger ‘document’ for temporary use. These sections are genes and the document is the genome. By providing in this way temporary working copies of genetic text, RNA contributes to the central purpose of genes, which is to provide the sequence specifications for manufacturing the functional proteins that do the molecular work of life.

This is where the genetic code comes in, and with it the daunting problem it poses for naturalistic accounts of origins. The key thing to grasp is that genes are as unlike proteins as successions of dots and dashes are unlike written text. Only when a convention is established, like Morse code, and a system put in place to implement that convention, can dots and dashes be translated into written text. And then, only meaningful arrangements of dots and dashes will do. Likewise, only a system implementing a code for translating gene sequences (made from the four nucleotides) into protein sequences (made from the twenty amino acids) can enable genes to represent functional proteins, as they do in life.

What makes it so hard to imagine how this system could have evolved is the need for it to be complete in order for it to work, coupled with the need for it to be complex in order to be complete. To agree that “•” stands for e is relatively simple, but not in itself very helpful. Only when a whole functional alphabet is encoded in this way do we have something useful. Similarly, it seems that an apparatus for decoding genes, and thereby implementing a genetic code, would have to physically match each of the twenty biological amino acids to a different nucleotide pattern. Whatever else that apparatus might be, it can’t be simple. Moreover, it can’t be useful without some meaningful genes (encoding useful proteins) to go with it.

This realization is enough to make even a committed materialist give up on the idea of an evolutionary explanation. Evolutionary biologist Eugene Koonin has. In his words, “How such a system could evolve is a puzzle that defeats conventional evolutionary thinking.” [4] Accordingly, he proposes the unconventional solution of an infinite universe (a multiverse) in which even the seemingly impossible becomes certain.

I think it’s fair to say that most biologists are uncomfortable with Koonin’s proposal. Part of what bothers them is the tacit abandonment of more conventional solutions, as though these have no hope of ever succeeding. In the wake of this, Lehmann, Cibils, and Libchaber are, in effect, refusing to throw in the towel, and that merits attention in itself.

Instead of making the universe bigger, they propose a way of making the genetic code smaller, hoping that this downsized version might feasibly arise in a conventional evolutionary way. But there’s a risk. Their efforts to simplify could easily lead to oversimplification.

They presuppose an RNA-world endowed with two kinds of tRNA molecules, each of which has dual functional capacities: at one end they attach an amino acid, and at the other they pair with a specific base triplet (codon) on an RNA gene. Their world also has steady supplies of two kinds of amino acid, at least one kind of RNA gene that restricts itself to the two codons recognized by the tRNAs, and “a ribosome-like cofactor” that cradles the complex formed between the tRNA that caries the new protein chain and the codon to which it is paired.

The immediate question is, how could a world that has never encoded proteins have done so much preparation to become a world that does encode proteins? We seem to be left with the familiar alternatives of extraordinary improbability or guided design. Here it has to be conceded that Koonin’s proposal is at least commendably frank, in that it acknowledges the improbabilities. Lehmann et al., like everyone else, prefer not to go there.

Maybe that’s because, like everyone else, they find themselves between a rock and a hard place. Since the modern system for implementing the genetic code is way too complicated to have appeared by accident, they know they need to look for not just a simplification, but a radical simplification. But if it’s hard to explain how even a modest simplification could leave the basic function intact, imagine how hard it becomes for a radical simplification.

Their efforts to find a workable compromise between sterile simplicity and complex functionality are both laudable and instructive, but unsuccessful nonetheless.

Their simplified proteins are built from two amino acids instead of twenty. People have tried to fish out life-like proteins from pools of random chains made from just a few amino acids, but nothing impressive has ever come of it. That’s not surprising when you consider how fussy real-life proteins are about their amino-acid sequences. The idea of forcing them to hand over eighteen of their constituent amino acids without so much as a complaint is just plain unrealistic.

Lehmann, Cibils, and Libchaber attempt to push their proteins even further. Their translation mechanism has an extraordinarily high error rate, resulting in about one wrong amino acid for every six added to a new chain. And that’s under ideal conditions. Things get much worse if the conditions deteriorate.

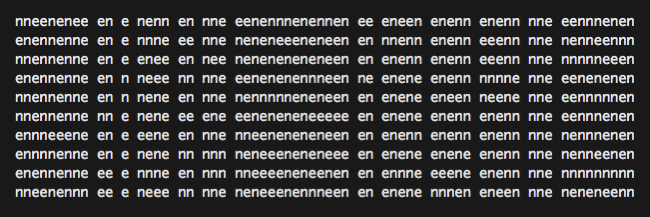

Let’s experiment with this. If you haven’t read the title of their paper, hold off and we’ll see if you can read a version of it that has been simplified along the lines of their proposal. Protein functions would have to be remarkably relaxed about protein sequences for their simplifications to have worked in early life. The test will be to see whether you are comparably relaxed about spelling when you read.

The most common vowel in the title of their paper is e, and the most common consonant is n. To mimic their proposed simplification of proteins, let’s replace all the vowels in their title with e and all the consonants with n, randomly mistaking vowels and consonants about one sixth of the time. The random errors make many versions of the title possible, but you don’t have to see many examples to convince yourself that this isn’t going to work:

This isn’t meant to be a proof, of course, just an illustration. It approximates the scale of simplification that Lehmann et al. have proposed for protein sequences, and in so doing it provides very reasonable grounds for suspecting they have oversimplified. Something closer to proof can be had by examining how fussy real protein functions are about protein sequences. That whole field of work, as I see it anyway, seems to confirm the suspicion.

So in the end, Lehmann, Cibils and Libchaber seem to have taken us a step further from a naturalistic explanation for life rather than a step closer. Some people will be more pleased with that conclusion than others, and that’s okay.

From the standpoint of science, every step is progress.

This article is crossposted from Biologic Institute’s Perspectives blog.

References:

[1] Perspectives, 1 April 2009

[2] Lehmann J, Cibils M, Libchaber A (2009)

[3] ScienceDaily

[4] Koonin EV (2007)